A Free Guide to Data Center Power

The term “data center power” refers to the infrastructure, systems, and processes used to supply and manage electrical power in a data center. This includes power supply and distribution, backup systems, and the management tools that keep the data center running continuously and efficiently, without interruption.

It’s pretty obvious that an adequate supply of high-quality power is critical to the availability and reliability of the data center’s IT equipment and services. Data center power rests equally on two fundamental pillars: power infrastructure and power management. Each discipline has its own planning considerations, procedures, configurations, and best practices.

In this article, we take a detailed look at various aspects of power in data centers, including power usage, power efficiency metrics, power infrastructure, management, cost considerations, standards, and much more.

Key data center power concepts

| Concept | Description |
|---|---|
| Power density | The amount of power that can be supplied to a specific area or rack within the data center. |
| Power capacity | The maximum amount of power that a data center can supply to all its equipment without causing an overload or failure. |
| Redundancy | The duplication of critical power infrastructure components and systems to ensure the uninterrupted supply of power in the event of a failure or outage. |
| Power efficiency | A measure of how efficiently power is utilized within the data center infrastructure. Improving efficiency involves minimizing power waste and maximizing the utilization of electrical energy for productive purposes. |
| Power distribution | The process of distributing power to the IT equipment within the data center. |
| Power monitoring | The tools and systems used to monitor power usage, temperature, and humidity levels within the data center to prevent power failures or outages. |
| Power management | The process of managing power usage, load balancing, and other aspects of power distribution to optimize efficiency. |

Key performance indicators (KPIs) for power efficiency and reliability

| KPI | Description |
|---|---|
| Power usage effectiveness (PUE) | The ratio of total facility power to the power used by IT equipment. A PUE of 1.0 would mean that all power goes to IT equipment, so lower values are better. |
| Energy efficiency ratio (EER) | The ratio of cooling output (BTU/h) to electrical power input (W); a higher EER indicates better cooling efficiency. |
| Reliability | A general measure of the probability of a component or system operating according to specifications during a particular time interval. |
| Availability | The percentage of time that a component or system operates within designated parameters. |
| Mean time between failures (MTBF) | The average operating time between consecutive failures of a repairable system or component. A higher MTBF indicates greater reliability. |
| Mean time to repair (MTTR) | The average time it takes to repair a failed component or system. A lower MTTR means the system can be restored to service more quickly. |
| Carbon usage effectiveness (CUE) | The total carbon dioxide emissions caused by the data center’s energy use, divided by the energy used by IT equipment (kg CO2e per kWh of IT energy). Lower values are better. |
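The ratio-based KPIs above reduce to simple arithmetic. The sketch below computes PUE, CUE, and steady-state availability using the standard relationships: PUE = total facility energy / IT energy, CUE = total CO2 emissions / IT energy, and availability = MTBF / (MTBF + MTTR). The function names and all sample figures are hypothetical and chosen only for illustration.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def cue(total_co2_kg: float, it_kwh: float) -> float:
    """Carbon usage effectiveness: kg of CO2-equivalent per kWh of IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_co2_kg / it_kwh


def availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Hypothetical month: the facility draws 1,500 MWh, of which IT gear uses 1,000 MWh.
facility_kwh, it_kwh = 1_500_000, 1_000_000
# Hypothetical grid emission factor of 0.4 kg CO2e per kWh, applied to all facility energy.
co2_kg = 0.4 * facility_kwh

print(f"PUE: {pue(facility_kwh, it_kwh):.2f}")       # PUE: 1.50
print(f"CUE: {cue(co2_kg, it_kwh):.2f} kg CO2e/kWh")  # CUE: 0.60 kg CO2e/kWh
# Hypothetical component: 50,000 h MTBF, 8 h MTTR.
print(f"Availability: {availability(50_000, 8):.4%}")
```

CUE can also be written as the grid's carbon emission factor multiplied by PUE (0.4 × 1.5 = 0.6 here). This is why improving PUE lowers CUE as well.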

Overview of power usage in data centers

Understanding power usage and the importance of its monitoring

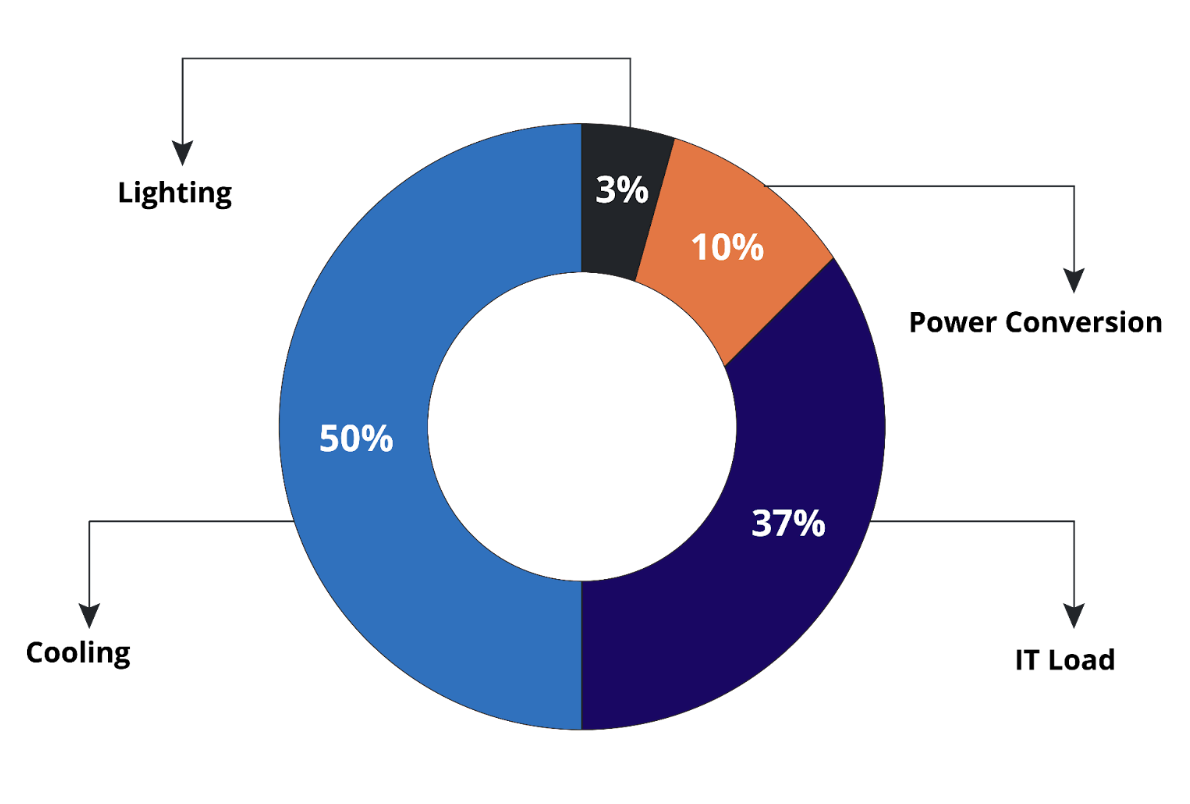

Power is used in a data center to run IT equipment (e.g., servers, storage devices, and networking equipment); cooling systems such as air conditioners, computer room air handler (CRAH) units, and chillers; and supporting infrastructure such as power distribution systems, backup power systems, lighting, and other equipment. Of these various components, cooling actually uses the most power, about 50% of the total.

Typical breakdown of power usage in a data center. (Source)

Because data centers consume so much power, electricity is a significant operating expense. Monitoring and optimizing power usage is essential for several reasons:

- Cost management: Monitoring and optimizing power usage in data centers can help reduce operating costs associated with electricity consumption, resulting in significant savings for the organization.

- Capacity planning: Power usage monitoring can help in capacity planning and identifying potential bottlenecks or areas for improvement.

- Environmental sustainability: By managing power consumption, data centers can reduce their carbon footprint and contribute to the organization’s sustainability goals.

- Performance optimization: Proper power management keeps equipment operating within its electrical limits and reduces the risk of power-related downtime.

Determining power requirements

Determining a data center’s power requirements means calculating the power consumed by the IT equipment, the cooling system, and the supporting infrastructure. Here are the main steps:

- Estimate the IT load: Determine the amount of power required to run the IT equipment, including servers, storage, and networking gear.

- Determine cooling system power requirements: The cooling system’s power requirements depend on the amount of heat generated by the IT equipment and the target temperature range within the data center. Cooling can account for as much as 50% of a data center’s total power usage.

- Calculate supporting infrastructure power usage: Compute the total power needed for supporting infrastructure components such as lighting, security systems, backup power systems, etc.

- Account for redundancy: Redundancy is essential for continuous uptime. It involves duplicating components of the power system (for example, in N+1 or 2N configurations) so that there is always a backup in case of failure.

When determining power requirements, it is also important to plan ahead. The data center’s power supply must meet current needs and also leave enough capacity to handle future growth.
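The steps above can be rolled into a rough first-pass estimate. The sketch below assumes a simple model. Cooling is expressed as a fraction of IT load, supporting infrastructure is a fixed allowance, and growth is a percentage of headroom. Redundancy is handled by sizing equal supply modules (e.g., UPS units) as N, N+1, or 2N. Every class, function, parameter, and number here is hypothetical, and a real design should be done with an electrical engineer.

```python
import math
from dataclasses import dataclass


@dataclass
class PowerEstimate:
    it_kw: float          # estimated IT load
    cooling_ratio: float  # cooling power as a fraction of IT load (hypothetical)
    support_kw: float     # lighting, security, backup system losses, etc.
    growth: float         # headroom for future growth, e.g. 0.25 = 25%

    def design_load_kw(self) -> float:
        """Load to plan for: today's total plus growth headroom."""
        base = self.it_kw * (1 + self.cooling_ratio) + self.support_kw
        return base * (1 + self.growth)


def modules_needed(load_kw: float, module_kw: float, scheme: str = "N+1") -> int:
    """Number of equally sized supply modules for a given redundancy scheme."""
    n = math.ceil(load_kw / module_kw)  # N: modules needed just to carry the load
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1
    if scheme == "2N":
        return 2 * n
    raise ValueError(f"unknown scheme: {scheme}")


est = PowerEstimate(it_kw=400, cooling_ratio=0.6, support_kw=40, growth=0.25)
load = est.design_load_kw()
print(f"Design load: {load:.0f} kW")
print("500 kW modules, N+1:", modules_needed(load, 500, "N+1"))
print("500 kW modules, 2N:", modules_needed(load, 500, "2N"))
```

Redundancy changes the installed capacity, not the load itself. The facility still draws roughly the design load, and N+1 or 2N sizing makes sure that load survives the loss of a module.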

Single-phase vs. three-phase power

The choice between single-phase and three-phase power in a data center depends on factors such as the size of the facility, power requirements, equipment types, and future scalability needs. Data centers with high-density computing and heavy power loads often opt for three-phase power. At the same line voltage and current rating, a three-phase circuit delivers √3 times the power of a single-phase circuit. When the load is balanced across the phases, it also provides a steady, continuous flow of power.

Power balance refers to the equal distribution of load among the different phases or circuits. An imbalanced system can suffer from uneven heating of equipment, increased losses, and reduced efficiency. Balance matters most in three-phase systems. Because they carry higher loads, they deliver their full capacity only when the load is spread evenly across all three phases.
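Phase balance is easy to check from per-phase current readings, which many metered three-phase PDUs report. The sketch below uses one common way to quantify imbalance: the maximum deviation from the average, divided by the average. This is the same form NEMA uses for voltage unbalance, applied here to currents. The readings and the phase labels are hypothetical.

```python
def percent_unbalance(phase_values: list[float]) -> float:
    """Maximum deviation from the mean, as a percentage of the mean."""
    if len(phase_values) != 3:
        raise ValueError("expected one reading per phase (3 values)")
    mean = sum(phase_values) / 3
    if mean == 0:
        return 0.0
    max_dev = max(abs(v - mean) for v in phase_values)
    return 100 * max_dev / mean


# Hypothetical per-phase currents (amps) read from a three-phase rack PDU.
readings = {"L1": 18.0, "L2": 12.0, "L3": 15.0}
unbalance = percent_unbalance(list(readings.values()))
print(f"Current unbalance: {unbalance:.1f}%")

# A simple balancing rule: connect the next device to the least-loaded phase.
print("Plug the next server into:", min(readings, key=readings.get))
```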

Some key differences between single-phase and three-phase power in the context of data center power are shown in the table below.

| Aspect | Single-phase power | Three-phase power |
|---|---|---|
| Voltage | Typically 120V or 240V in data center applications | Typically 208V or 415V in data center applications |
| Power capacity | Lower power capacity | Higher power capacity |
| Typical loads | Generally used for smaller equipment | Suitable for larger equipment and servers |
| Efficiency | Lower efficiency: power delivery pulsates, and more current is needed for the same load | Higher efficiency: balanced phases deliver constant power with less current per conductor |
| Complexity and cost | Simpler and less expensive to install and maintain | More complex and potentially costlier to implement |
| Scalability | Limited scalability for higher power demands | Scalable for larger data center facilities and future growth |
| Industry applicability | Commonly used in smaller data centers or office environments | Commonly used in larger data centers and enterprise settings |
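The capacity gap in the table follows from the power formulas. A single-phase circuit delivers V × I, while a balanced three-phase circuit delivers √3 × V(line-to-line) × I. The sketch below compares two 208V, 30A circuits. It assumes a power factor of 1 and the 80% continuous-load limit commonly applied to branch circuits under the U.S. National Electrical Code. The function name and circuit values are illustrative.

```python
import math


def circuit_kw(volts: float, amps: float, phases: int,
               power_factor: float = 1.0, derate: float = 0.8) -> float:
    """Usable real power (kW) of a branch circuit.

    volts: line-to-line voltage for three-phase, line voltage for single-phase.
    derate: continuous-load limit as a fraction of the breaker rating (assumed 0.8).
    """
    if phases == 1:
        va = volts * amps
    elif phases == 3:
        va = math.sqrt(3) * volts * amps
    else:
        raise ValueError("phases must be 1 or 3")
    return va * power_factor * derate / 1000


single = circuit_kw(208, 30, phases=1)
three = circuit_kw(208, 30, phases=3)
print(f"208 V 30 A single-phase: {single:.2f} kW")  # 4.99 kW
print(f"208 V 30 A three-phase:  {three:.2f} kW")   # 8.65 kW
```

The ratio between the two results is exactly √3, regardless of the derating or power factor chosen.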

Power infrastructure

Let’s begin our discussion of power infrastructure with a look at some of the most common types of power equipment in data centers:

- Transformers: Transformers are used to step up or step down voltage levels between the utility power feed and the data center electrical distribution system. They ensure that the power received from the utility is compatible with the data center’s requirements.

- Power distribution units (PDUs): An essential component of data center power infrastructure used to distribute electrical power to multiple devices or equipment. A PDU serves as a central point for power distribution, receiving electricity from a primary power source and delivering it to IT loads and other critical equipment.

- Power cables and connectors: These simple devices connect the power equipment to the IT equipment.

- Power breakers: Also known as circuit breakers, they are designed to protect electrical equipment from overcurrent or short-circuit conditions.

- Uninterruptible power supplies (UPSes): A UPS provides backup power to IT equipment during power disturbances such as outages, overvoltage or undervoltage conditions, sags, and swells. It typically combines a rectifier/charger, batteries, and an inverter. Depending on the design, the load either runs through the inverter continuously (online double-conversion UPS) or is switched to battery power when the input fails (standby and line-interactive UPS).

- Backup generators: Generators provide backup power in the event of prolonged power outages that exceed the capabilities of UPS systems.

- Automatic transfer switches (ATSes): An ATS is a device that automatically switches the load between primary utility power and an alternate power source such as a generator (in the event of a power disruption).

Data center tiers and the importance of redundancy and backup systems

Redundancy and backup systems minimize downtime, maintain business continuity, mitigate risk, and help ensure compliance with regulatory requirements. Data centers are commonly classified using a four-tier system, which provides a standard way to compare the redundancy, fault tolerance, and availability of different facilities. Higher tiers offer more capability and less potential for business disruption, but they also require more investment in infrastructure and maintenance.

The Uptime Institute created the tier system and certifies facilities as official tier data centers. Facilities that are not certified by the Uptime Institute often use the equivalent term “level” instead. The tiers are shown in the table below.

| Tier | Description | Expected uptime percentage | Expected annual downtime (hours) |
|---|---|---|---|
| 1 | A Tier 1 data center uses basic infrastructure and offers minimal redundancy. It typically has a single path for power and cooling and no redundant components. | 99.671% | 28.8 |
| 2 | A Tier 2 data center offers some redundancy and a degree of fault tolerance. It has redundant components, such as backup power and cooling systems, but may not have redundant paths for power and cooling. | 99.741% | 22.7 |
| 3 | A Tier 3 data center provides a high level of redundancy and is designed to be concurrently maintainable. It has multiple paths for power and cooling and redundant components that can be taken offline without affecting loads. | 99.982% | 1.6 |
| 4 | A Tier 4 data center provides the highest level of redundancy and fault tolerance. It has multiple paths for power and cooling and fully redundant components that are designed to be fault tolerant. | 99.995% | 0.4 |
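The downtime column follows directly from the uptime percentage: annual downtime = (1 − uptime) × 8,760 hours. The quick check below assumes a 365-day year. The function name is illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def annual_downtime_hours(uptime_pct: float) -> float:
    """Expected downtime per year for a given uptime percentage."""
    return (1 - uptime_pct / 100) * HOURS_PER_YEAR


for tier, uptime in {1: 99.671, 2: 99.741, 3: 99.982, 4: 99.995}.items():
    hours = annual_downtime_hours(uptime)
    print(f"Tier {tier}: {hours:.1f} h/year (about {hours * 60:.0f} minutes)")
```

Running it gives roughly 28.8, 22.7, 1.6, and 0.4 hours per year for Tiers 1 through 4.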

Scalability

Power scalability is a critical consideration for data center operators as they plan for growth and expansion. By considering power density, modular design, power distribution, energy efficiency, and power monitoring, data center operators can ensure that the power infrastructure is designed to accommodate increasing power demands in a cost-effective and efficient manner.

Let’s take a simple scenario to understand this. Suppose a data center is running at 50% of its power capacity, with 1 MW of power being used to support the IT infrastructure. The organization plans to increase its IT workload, which will require an additional 500 kW of power. To accommodate this additional requirement, the data center operator has the following options:

- Upgrade the power infrastructure.

- Use a modular data center design to add more power capacity.

- Improve energy efficiency by upgrading to more energy-efficient systems.

- Monitor power usage in the data center to identify areas of inefficiency and adjust accordingly.

Ultimately, the choice among these options should rest on a comprehensive analysis that weighs future power capacity needs, budget, flexibility requirements, energy efficiency and sustainability goals, and overall business continuity requirements and timelines. A scaling project also typically involves consulting experts such as electrical engineers, data center consultants, and equipment vendors to gather insights and recommendations for the expansion.
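A quick headroom calculation helps frame the options in the scenario above. If 1 MW is 50% of capacity, the facility has 2 MW of capacity, so the extra 500 kW brings utilization to 75%. The sketch below shows utilization and the remaining headroom to a planning ceiling. The 80% ceiling and the 10% efficiency gain are hypothetical assumptions, not industry rules.

```python
def headroom_kw(load_kw: float, capacity_kw: float, ceiling: float = 0.80) -> float:
    """Load that can still be added before reaching the (hypothetical) planning ceiling."""
    return capacity_kw * ceiling - load_kw


current_kw = 1_000.0
capacity_kw = current_kw / 0.50  # 1 MW at 50% utilization implies 2 MW capacity
planned_kw = current_kw + 500.0  # the additional IT workload

scenarios = [
    ("today", current_kw),
    ("after growth", planned_kw),
    # Hypothetical: efficiency upgrades trim 10% from the grown load.
    ("after growth + efficiency", planned_kw * 0.90),
]
for label, load in scenarios:
    print(f"{label:>26}: {load / capacity_kw:.1%} used, "
          f"{headroom_kw(load, capacity_kw):.0f} kW headroom to 80%")
```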

Power distribution

A data center typically receives power from the utility grid at high voltage and then distributes it to the IT equipment at a lower voltage. Power is distributed through a series of equipment connected to the data center’s servers and other gear.

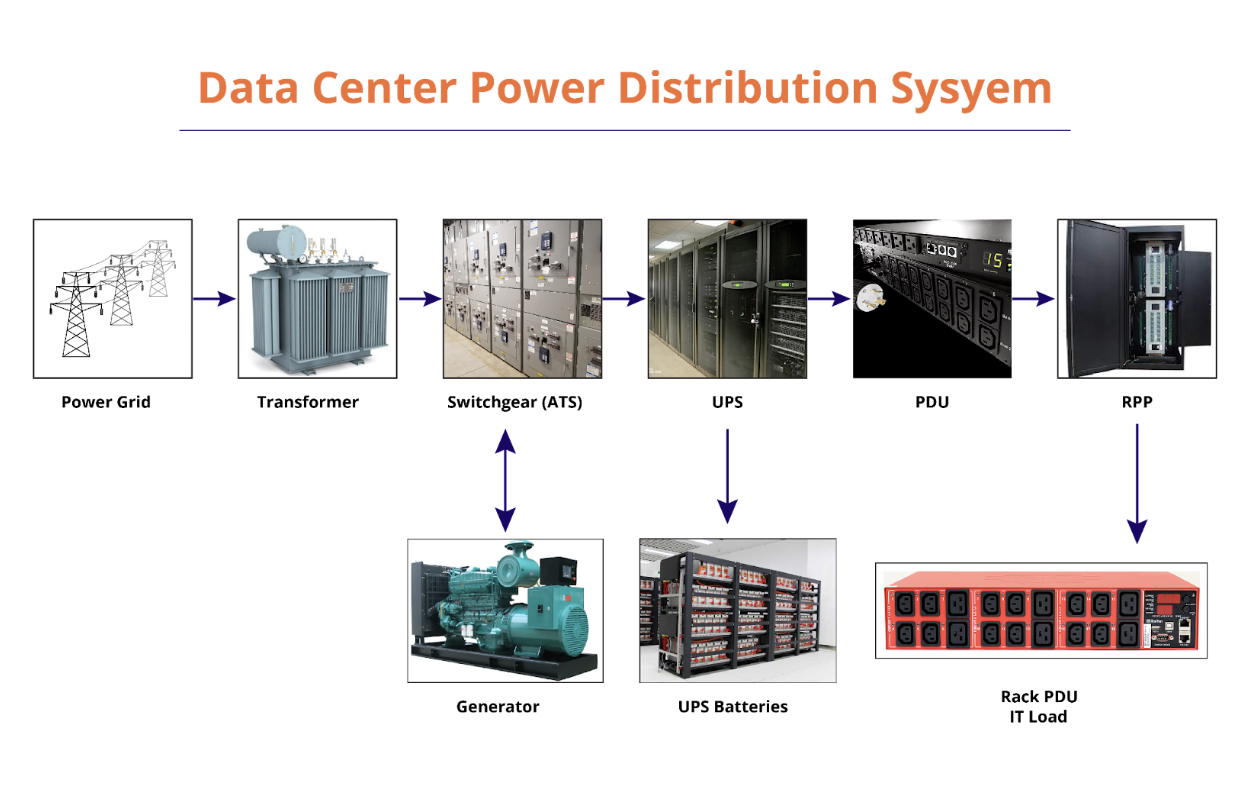

Data center power distribution system and components

Here’s a breakdown of how power is distributed in a data center:

- Utility power: The data center receives power from the utility grid through a main electrical service entrance. The incoming power is typically high voltage (e.g., 13.8 kV or 33 kV) and is stepped down by transformers to a lower voltage.

- Electrical switchgear: The power is then delivered to the electrical switchgear, which typically includes an automatic transfer switch (ATS), and on to the UPS system.

- Generator: A generator acts as a backup source of power, with the ATS transferring load between backup and utility power as needed.

- UPS: The UPS system provides backup power in the event of a power outage or interruption. It also conditions the power to keep it stable and free from surges, spikes, and other electrical disturbances. Generators usually take 10 to 15 seconds to start and come up to speed after a utility outage. The UPS carries the load during this delay and then gradually transfers it to the generators once they are online.

- Power distribution units (PDUs): The power is then distributed to the IT equipment through power distribution units (PDUs).

- Remote Power Panel (RPP): An RPP distributes electrical power from PDUs to individual rack-mounted power distribution units (rPDUs) or directly to the equipment. It serves as an intermediate point between the main power source and the equipment, providing a convenient and localized power distribution solution.
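The UPS only has to carry the load until the generators are online, so a basic sizing check compares battery runtime with the generator start delay. The sketch below uses a deliberately simple linear model: usable battery energy × inverter efficiency ÷ load. The efficiency, usable fraction, safety margin, and load and battery figures are all hypothetical. Real runtime also depends on battery age, temperature, and discharge rate, so vendor runtime tables should be used for actual sizing.

```python
def ups_runtime_seconds(battery_wh: float, load_w: float,
                        inverter_eff: float = 0.95,
                        usable_fraction: float = 0.8) -> float:
    """Rough runtime at a constant load (simplified linear model).

    inverter_eff and usable_fraction are assumed defaults, not vendor data.
    """
    usable_wh = battery_wh * usable_fraction
    return usable_wh * inverter_eff / load_w * 3600  # hours -> seconds


load_w = 200_000        # hypothetical 200 kW critical load
battery_wh = 40_000     # hypothetical 40 kWh battery string
generator_ready_s = 15  # upper end of the 10-15 s generator start delay
margin = 10             # hypothetical safety factor (e.g., a failed first start)

runtime_s = ups_runtime_seconds(battery_wh, load_w)
print(f"Estimated UPS runtime: {runtime_s / 60:.1f} min")
print("Bridges generator start with margin:", runtime_s >= generator_ready_s * margin)
```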

Cost considerations and management

Power and cooling costs are a significant percentage of overall data center expenses. To help monitor and manage costs, most providers rely on data center infrastructure management (DCIM) software that lets them monitor and manage power consumption and other resources in real time. Using these tools in conjunction with effective monitoring strategies allows data centers to reduce energy costs and improve their power usage effectiveness (PUE) scores, which measure the efficiency of a data center’s power usage. The closer the PUE is to 1, the more efficient the data center is in its use of power.

The following are some ways that DCIM and other power monitoring strategies can help manage costs in a data center:

- Real-time monitoring

- Power usage tracking

- Temperature and humidity monitoring

- Predictive analytics to forecast future power usage

- Power load balancing
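To see why PUE improvements matter financially, the annual electricity bill can be approximated as IT load × PUE × hours per year × tariff. The sketch below compares two PUE values for the same IT load. The load, the PUE values, and the $0.10/kWh tariff are hypothetical, and the model assumes a constant load all year.

```python
HOURS_PER_YEAR = 8_760


def annual_energy_cost(it_kw: float, pue: float, usd_per_kwh: float) -> float:
    """Yearly facility electricity cost for a constant IT load."""
    facility_kw = it_kw * pue  # PUE scales the IT load up to the total facility load
    return facility_kw * HOURS_PER_YEAR * usd_per_kwh


it_kw = 500    # hypothetical average IT load
tariff = 0.10  # hypothetical blended rate, USD per kWh

before = annual_energy_cost(it_kw, pue=1.8, usd_per_kwh=tariff)
after = annual_energy_cost(it_kw, pue=1.4, usd_per_kwh=tariff)
print(f"PUE 1.8: ${before:,.0f}/year")
print(f"PUE 1.4: ${after:,.0f}/year")
print(f"Savings: ${before - after:,.0f}/year")
```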

Power management best practices and tools

Effective power management requires a combination of best practices and tools, such as the following.

- Implement a power management plan: Create a comprehensive strategy that outlines procedures for managing power consumption, optimizing efficiency, and ensuring uptime.

- Conduct regular audits: Energy audits can provide a detailed analysis of the data center’s energy usage, including recommendations for improving efficiency and reducing costs.

- Use virtualization technologies: Technologies such as server virtualization can help reduce power consumption by consolidating workloads onto fewer physical servers. This not only reduces energy usage but also saves space and reduces the need for additional hardware.

- Implement power capping: Limit the maximum amount of power that a server or system can draw. This keeps demand spikes within circuit and rack power budgets and improves the utilization of provisioned capacity.

- Invest in renewable energy integration systems: To reduce power expenditures and promote sustainability, many data centers are investing in renewable energy sources such as solar, wind, or hydropower.

- Use energy-efficient hardware: Using energy-efficient hardware lets data centers reduce energy consumption and save on costs in the long run.

- Implement intelligent power management: Intelligent power management tools can automatically monitor power usage and adjust power settings as needed to optimize efficiency.

- Employ power management software tools: Applications that help manage and monitor power consumption in data centers can provide real-time information on power usage and environmental conditions, allowing administrators to optimize power usage and reduce energy costs.
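Consolidation saves power by running the same workloads on fewer, busier hosts. The sketch below packs hypothetical workload CPU demands onto hosts with the first-fit decreasing bin-packing heuristic. This is a simple stand-in for what real placement schedulers do, not any particular product's algorithm. It then estimates power with a linear idle-to-peak server power model. The 200 W idle and 400 W peak values, the 80% host ceiling, and the workload sizes are assumptions.

```python
def first_fit_decreasing(demands: list[int], capacity: int) -> list[list[int]]:
    """Greedy bin packing: place each demand (largest first) on the first host it fits."""
    hosts: list[list[int]] = []
    for d in sorted(demands, reverse=True):
        if d > capacity:
            raise ValueError(f"demand {d} exceeds host capacity {capacity}")
        for host in hosts:
            if sum(host) + d <= capacity:
                host.append(d)
                break
        else:  # no existing host had room, so power on another one
            hosts.append([d])
    return hosts


def server_watts(cpu_pct: float, idle_w: float = 200.0, peak_w: float = 400.0) -> float:
    """Linear power model: idle draw plus a share of the idle-to-peak range."""
    return idle_w + (peak_w - idle_w) * cpu_pct / 100


# Hypothetical workloads, each as a percentage of one host's CPU capacity.
vms = [30, 20, 15, 10, 25, 35, 5, 20, 10, 30]

before_w = sum(server_watts(u) for u in vms)    # one physical server per workload
hosts = first_fit_decreasing(vms, capacity=80)  # keep hosts at or below 80% CPU
after_w = sum(server_watts(sum(h)) for h in hosts)

print(f"{len(vms)} servers -> {len(hosts)} hosts")
print(f"Estimated draw: {before_w:.0f} W -> {after_w:.0f} W")
```

The model ignores memory, I/O, and failover headroom. It only illustrates why consolidation pays off: idle draw is paid once per powered-on host, so fewer, busier hosts draw less in total than many mostly idle ones.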

Here are some specific types of systems that can be valuable in managing data center power:

- Data center infrastructure management (DCIM) software: A comprehensive software suite used to manage and monitor various data center infrastructure components, including power and cooling systems, IT equipment, and environmental sensors. DCIM provides real-time information on the performance of various systems and can thus help optimize energy usage, reduce downtime, and increase efficiency.

- Server power management software: This software is specifically designed for managing the power usage of individual servers within a data center. It can be used to adjust power usage settings based on server usage levels.

- Energy management systems (EMS): EMS is a broader term for the software and hardware tools used to manage and optimize energy usage across an organization. In the context of data centers, an EMS may encompass both DCIM and server power management software, along with other tools for managing energy use across the facility.
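When servers are capped individually, a rack or circuit budget has to be split among them. The sketch below shows one simple, hypothetical policy. If the total requested draw fits the budget, every server gets what it asks for. If not, each server first receives a minimum floor, and the remaining budget is shared in proportion to each server's demand above that floor. The function name, the 150 W floor, and the server names and wattages are illustrative assumptions, not the behavior of any particular power management product.

```python
def allocate_caps(requests_w: dict[str, float], budget_w: float,
                  floor_w: float = 150.0) -> dict[str, float]:
    """Split a rack power budget into per-server caps (hypothetical policy)."""
    if sum(requests_w.values()) <= budget_w:
        return dict(requests_w)  # everything fits, so no capping is needed
    if floor_w * len(requests_w) > budget_w:
        raise ValueError("budget cannot cover the minimum cap for every server")
    # Give every server its floor, then share the remaining budget in
    # proportion to how much each server asks for above that floor.
    spare_w = budget_w - floor_w * len(requests_w)
    above = {name: max(req - floor_w, 0.0) for name, req in requests_w.items()}
    above_total = sum(above.values())
    return {
        name: floor_w + (spare_w * above[name] / above_total if above_total else 0.0)
        for name in requests_w
    }


# Hypothetical peak demands for three servers sharing a 1,200 W rack budget.
requests = {"web-1": 450.0, "web-2": 350.0, "db-1": 600.0}
caps = allocate_caps(requests, budget_w=1_200.0)
for name, cap in caps.items():
    print(f"{name}: requested {requests[name]:.0f} W, capped at {cap:.0f} W")
```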

Current and emerging trends

The following are some up-and-coming trends impacting the world of data center power.

Green energy

Many data centers are now turning to renewable energy sources like solar and wind power to reduce their reliance on traditional power sources.

Energy storage systems

Energy storage systems, such as flow batteries, thermal storage, and flywheels, can be used to store excess energy generated by renewable sources like solar and wind. The stored energy can then be used during periods of high demand or when traditional power sources are unavailable.

Artificial intelligence

Artificial intelligence (AI) can help identify inefficiencies and patterns in power usage, enabling data center operators to take proactive measures to reduce energy waste and improve efficiency. For example, machine learning (ML) algorithms can analyze data from sensors and other sources to identify opportunities to optimize cooling, lighting, and power-intensive hardware.

Liquid cooling

Liquid cooling technologies are emerging as an alternative to traditional air cooling. Because liquids remove heat far more effectively than air, liquid cooling can be more efficient and require less energy to operate.

Direct current (DC) power distribution within the data center

Direct current (DC) power distribution within the data center refers to supplying the IT equipment and components with DC power instead of the traditional alternating current (AC) power. In this approach, incoming AC power is converted to DC once, at a central rectifier, and then distributed as DC to the equipment. This avoids the repeated AC-DC and DC-AC conversions of a conventional design. The use of DC power distribution within the data center can have several benefits:

- Energy efficiency: DC power distribution eliminates the need for multiple AC-DC conversions, which reduces energy losses associated with conversion processes.

- Simplified power infrastructure: DC power distribution simplifies the power infrastructure by eliminating the need for multiple AC PDUs and transformers.

- Increased rack density: DC power distribution can support higher rack densities and increased power delivery to IT equipment.

- Improved reliability: DC power distribution can enhance reliability by eliminating potential points of failure associated with AC-DC conversion components.
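The efficiency argument comes down to multiplying the efficiencies of every conversion stage between the utility feed and the server electronics. The sketch below compares two paths. The conventional AC path runs through a double-conversion UPS, a PDU transformer, and a server power supply. The DC path uses a single central rectifier and a DC-DC converter in the server. Every stage efficiency and the IT load are hypothetical placeholders, and real values vary by equipment and load level.

```python
from math import prod


def chain_efficiency(stages: dict[str, float]) -> float:
    """Overall efficiency of conversion stages connected in series."""
    return prod(stages.values())


# Hypothetical stage efficiencies, for illustration only.
ac_chain = {
    "UPS rectifier (AC->DC)": 0.97,
    "UPS inverter (DC->AC)": 0.97,
    "PDU transformer": 0.98,
    "server PSU (AC->DC)": 0.94,
}
dc_chain = {
    "central rectifier (AC->DC)": 0.97,
    "server DC-DC converter": 0.96,
}

it_load_kw = 500  # hypothetical power consumed by the IT electronics themselves
for name, chain in [("AC", ac_chain), ("DC", dc_chain)]:
    eff = chain_efficiency(chain)
    losses_kw = it_load_kw / eff - it_load_kw  # extra input power lost as heat
    print(f"{name} distribution: {eff:.1%} efficient, {losses_kw:.0f} kW lost")
```

Because the losses multiply, removing even one or two stages has a noticeable effect on the overall figure. Conversion losses also become heat that the cooling system has to remove.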

Industry energy standards

- ASHRAE TC 9.9: Provides guidelines for data center environmental conditions, including temperature and humidity levels.

- The Green Grid: An industry consortium that publishes metrics (including PUE and CUE) and best practices for energy-efficient data center design and operation.

- Energy Star: Provides guidelines for energy-efficient equipment and practices.

- ISO 50001: Provides a framework for implementing an energy management system to optimize energy usage.

- IEC and NEMA: Provide standards for electrical equipment, including PDUs, UPSes, and other devices used in data centers.

- Leadership in Energy and Environmental Design (LEED): Primarily focuses on overall building sustainability and energy efficiency criteria.

- EU Code of Conduct on Data Centre Energy Efficiency: Provides guidelines and best practices to optimize energy consumption, reduce greenhouse gas emissions, and improve overall operational efficiency.

Recommendations and conclusion

Data center power management is a critical aspect of operating a modern data center. Power consumption represents a significant portion of a data center’s operating costs and environmental impact, so it’s important to adopt best practices and use the latest tools and technologies to optimize power usage.

Some key recommendations for effective data center power management include the following:

- Conduct regular energy audits to identify opportunities for improvement.

- Use DCIM to monitor and optimize power usage.

- Implement virtualization technology to help reduce power usage and increase energy efficiency.

- Adopt a power management plan that includes monitoring, measurement, and optimization strategies.

- Invest in energy-efficient equipment.

- Implement energy storage and renewable energy solutions to reduce dependence on the grid and increase resilience.

- Leverage AI and machine learning to optimize power usage and reduce costs.

- Consider DC power distribution within the data center where it can improve efficiency and reliability.

- Educate data center operations staff on power management and energy efficiency. This crucial best practice helps eliminate energy waste, optimize power usage, and reduce operating costs. It can be implemented through training programs, workshops, and ongoing education initiatives.

As the data center industry continues to grow and evolve, it will be increasingly important to adopt these strategies and use the latest tools and technologies to ensure that data centers are able to operate efficiently, effectively, and sustainably for years to come.